Integration of color and gloss cues in material discrimination and identification

Functional benefits of neural adaptation (shapes)

Top-down effects in fMRI adaptation (faces)

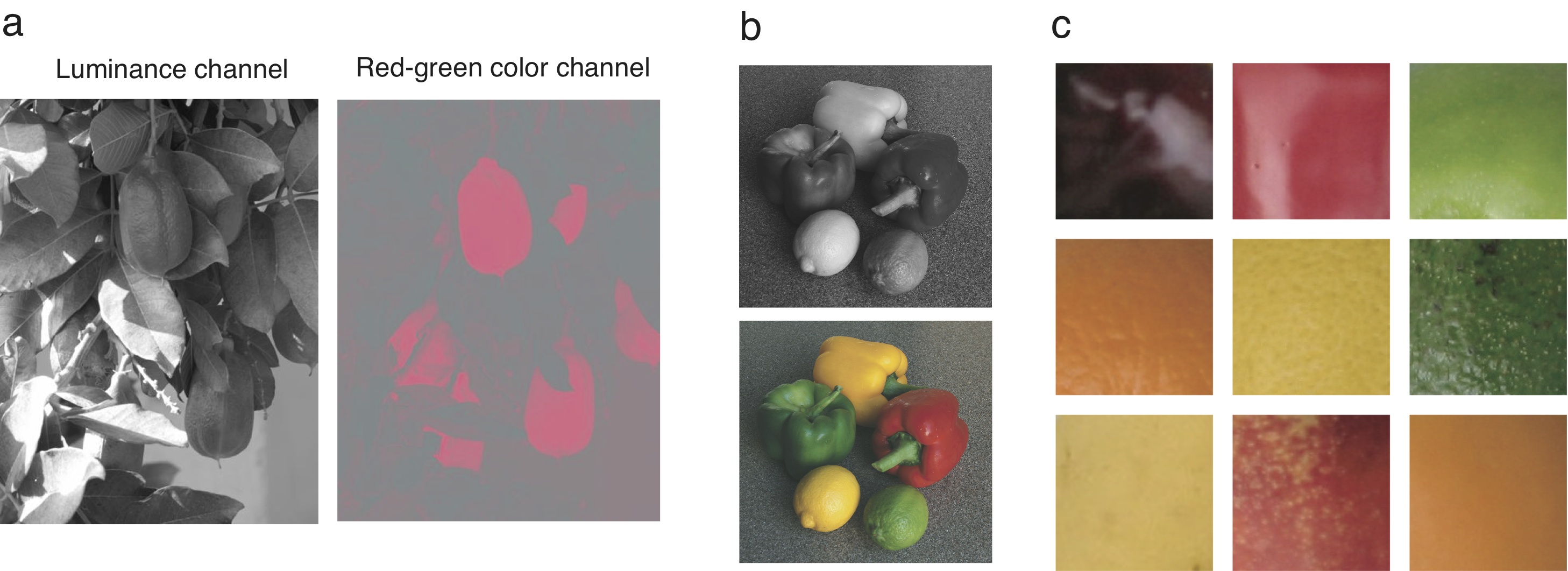

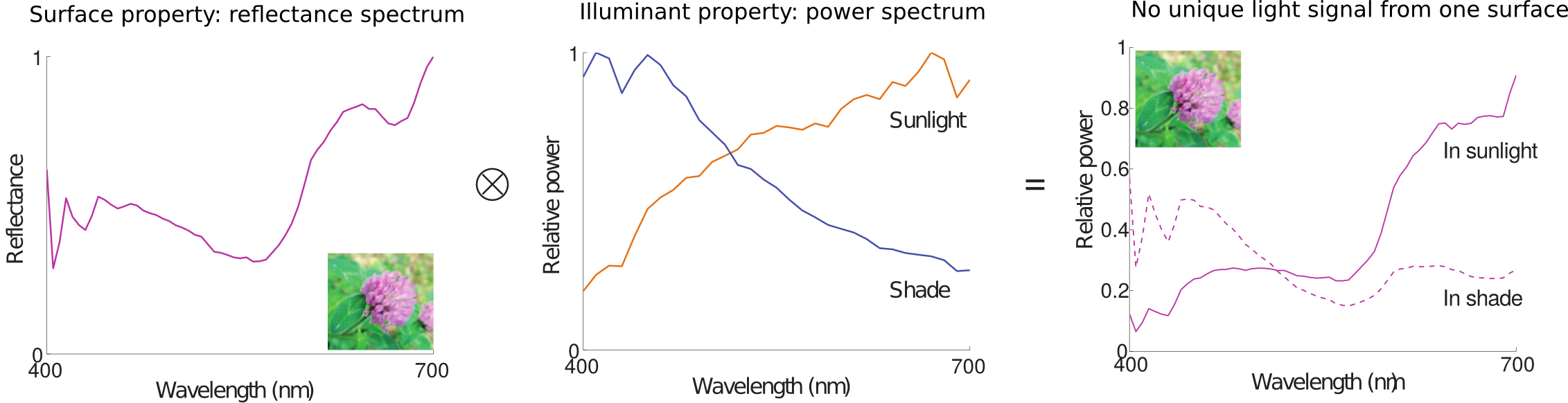

Prior knowledge affects the way we interpret incoming sensory signals, whether it comes from long-term learning (memory colors) or short-term learning (statistical priors). In my own research, I have found that prior knowledge about object identity affects the way we perceive object colors (see e.g. Olkkonen, Hansen, & Gegenfurtner, 2008). More recently, I found that prior knowledge acquired over the shorter term also affects color appearance in delayed color matches (Olkkonen, McCarthy, & Allred, 2014).

The effects of long-term or short-term memory processes on color appearance are not explained by current models of color perception or memory, but fit well in a probabilistic inference framework based on a Bayesian ideal observer. A Bayesian observer estimates the external cause of an incoming sensory signal by combining the sensory evidence with prior information about the world. Together with Toni Saarela and Sarah Allred, I have implemented a Bayesian model observer that produces interactions between perceptual constancy and short-term memory for lightness similar to those we recently observed in human observers for both lightness and hue (Olkkonen & Allred, 2014; Olkkonen, Saarela, & Allred, 2016). We'd eventually like to implement this model in full-color scenes and test it with a new, independent data set.
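To make the idea of combining sensory evidence with a prior concrete, here is a minimal sketch of a textbook Gaussian Bayesian observer. It is not the model from the papers cited above: the function name, the Gaussian assumptions, and all numbers are hypothetical, and treating a memory delay as extra sensory noise is an illustrative assumption.

```python
def posterior_estimate(observation, sensory_sd, prior_mean, prior_sd):
    """Posterior mean and SD for a Gaussian likelihood times a Gaussian prior.

    Hypothetical illustration only; not the published model observer.
    """
    sensory_var = sensory_sd ** 2
    prior_var = prior_sd ** 2
    # Weight on the observation grows as the sensory signal gets more reliable.
    w_obs = prior_var / (prior_var + sensory_var)
    mean = w_obs * observation + (1.0 - w_obs) * prior_mean
    sd = (prior_var * sensory_var / (prior_var + sensory_var)) ** 0.5
    return mean, sd


# Assumed example values (arbitrary lightness units).
observed = 0.8      # noisy sensory measurement of the stimulus
prior_mean = 0.5    # mean of recently seen stimuli (short-term prior)
prior_sd = 0.1

# Assumption: a memory delay makes the stored measurement noisier.
immediate_mean, _ = posterior_estimate(observed, 0.05, prior_mean, prior_sd)
delayed_mean, _ = posterior_estimate(observed, 0.10, prior_mean, prior_sd)

print(f"immediate estimate: {immediate_mean:.3f}")
print(f"delayed estimate:   {delayed_mean:.3f}")
```

Under these assumptions, the noisier (delayed) measurement is pulled more strongly toward the prior mean, which is the qualitative kind of bias such a framework can produce.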


Based on probability theory, it should be beneficial for human observers to combine information from multiple sources or "cues" when making perceptual estimates, for instance, how far away an object is, or what its identity is. There is an extensive literature on cue integration in visual and multisensory perception, which shows that human observers are often optimal or near-optimal in integrating information over multiple cues when making perceptual estimates. This means that the visual system has access to the uncertainty of each individual cue and weights the cue accordingly. This weighting leads to decreased variance in the perceptual estimate, but sometimes also to biases. For instance, in the ventriloquist effect, sound localization is biased by the visual cue (the puppet) because vision is more reliable than audition (see e.g. Alais & Burr, 2004).
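The reliability weighting described above can be sketched with the standard inverse-variance rule for independent Gaussian cues. This is a generic illustration rather than an analysis from any of the cited studies; the function name and the example numbers for the visual and auditory cues are hypothetical.

```python
def combine_cues(estimates, sds):
    """Reliability-weighted average of independent Gaussian cue estimates.

    Each cue's weight is proportional to its reliability (1 / variance).
    Returns the combined estimate, its SD, and the weights.
    """
    reliabilities = [1.0 / sd ** 2 for sd in sds]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_sd = (1.0 / total) ** 0.5
    return combined, combined_sd, weights


# Assumed ventriloquist-like example: positions in degrees of visual angle.
visual_position, visual_sd = 0.0, 1.0       # puppet, reliable cue
auditory_position, auditory_sd = 10.0, 4.0  # true sound source, noisy cue

position, sd, weights = combine_cues(
    [visual_position, auditory_position], [visual_sd, auditory_sd]
)
print(f"combined position: {position:.2f} deg, SD: {sd:.2f} deg")
print(f"weights (vision, audition): {weights[0]:.2f}, {weights[1]:.2f}")
```

With these assumed values, the combined estimate lies close to the visual position (the bias) and its SD is smaller than that of either cue alone (the variance reduction).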

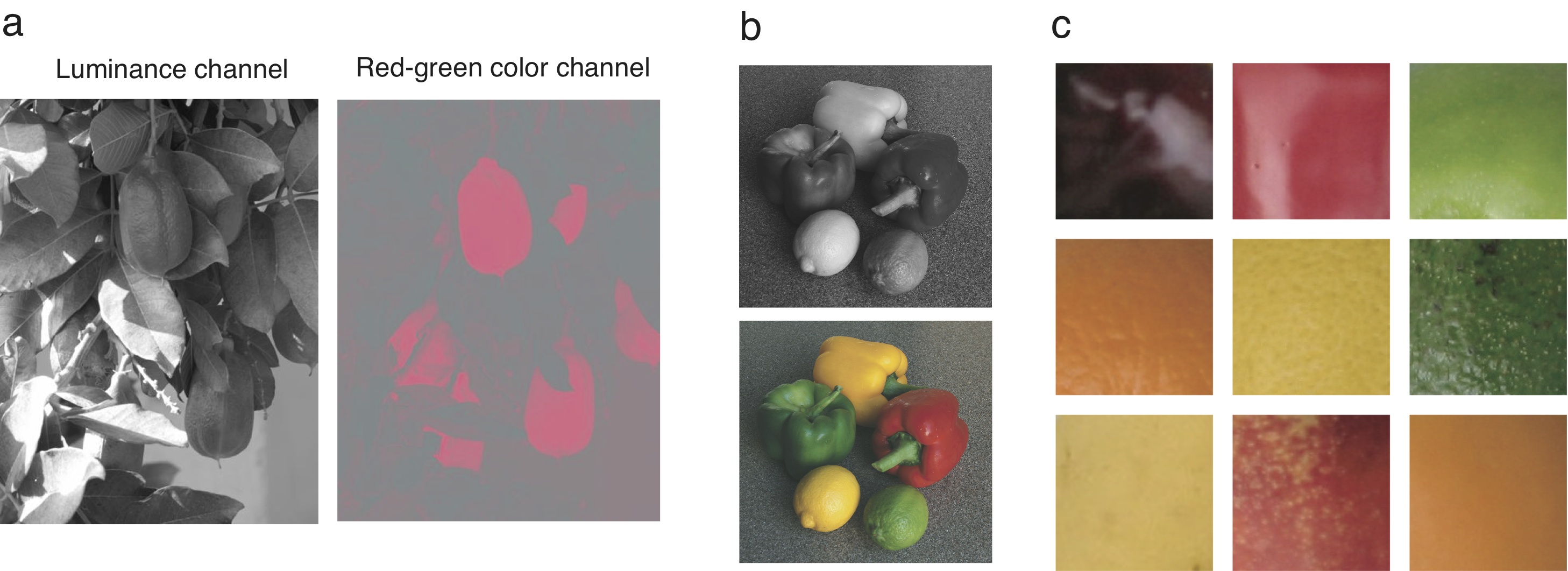

Although cue integration has been studied in many visual domains, we know much less about how different surface cues are integrated, for instance, color and gloss. Yet all surfaces and objects have more than one material property; they are either matte or glossy, rough or smooth, achromatic or colored, flexible or stiff. To understand how cues from surface material are used for object discrimination and identification, it is important to vary more than one material property in such tasks. I am currently running a project together with my colleague Toni Saarela where we study the integration of color and gloss cues in material discrimination and identification. In the first study, presented at the 2017 Vision Sciences Society meeting (Saarela & Olkkonen, 2017), we showed that observers combined cues from color and gloss near-optimally when making material discriminations in a psychophysical discrimination task. In a second, ongoing study, we asked whether observers combine information over color and gloss when making judgments about ripeness. For this, we designed a fruit space where green and matte surfaces indicate unripe fruit, and red and glossy surfaces indicate ripe fruit. We measured behavioral integration with a psychophysics experiment, and neural integration with an fMRI adaptation paradigm. I presented preliminary results at the Fall Vision Meeting in Washington DC in September 2019.

On a more functional level, it has been suggested that adaptation serves to improve the discriminability of stimuli, or to decorrelate neural signals (see e.g. Barlow & Foldiak, 1989). At the level of individual neurons, this would manifest as the sharpening of neuronal tuning curves (increased selectivity), and at the level of populations, as the sharpening of population tuning curves (selectivity for the whole population). There is some evidence for the sharpening hypothesis from electrophysiology and fMRI for simple stimulus features (e.g. orientation), but little behavioral evidence for stimuli other than color (which is a salient exception), and no fMRI evidence for more complex stimuli.

Nevertheless, the sharpening hypothesis is an attractive one, and despite the lack of evidence, it is still favored by some researchers. As there are such clear benefits for color discrimination from color adaptation, and some evidence for faces, we wanted to find out whether we see such benefits for object shape, both in behavioral discrimination thresholds and in fMRI pattern discriminability. In order to study the effect of adaptation on shape representations, we used a new toolbox developed by Toni Saarela for generating parametric 3D radial frequency patterns. The toolbox runs in Octave/Matlab and is available under an open source license on GitHub. See our recently published paper for the results!
